The DARPA Urban Challenge created an unprecedented concentration of ambition, intelligence, technology, and money in an ecosystem that advanced autonomous mobility and related technologies by decades.

One such advancement was the ibeo Lux Laser Scanning system. ibeo is a subsidiary of SICK, a veteran maker of basic but pervasive laser scanning technology. ibeo sponsored Team Lux, which was led by Director of Technology Holger Salow, with whom Robot Central had an opportunity to chat.

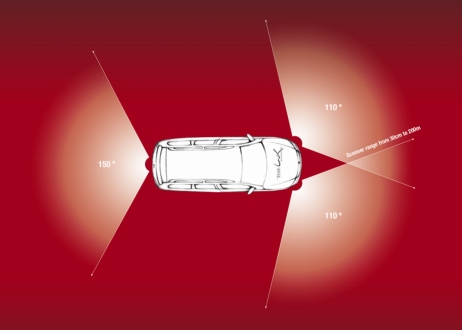

What was most striking about Lux’s vehicle was the apparent absence of sensors of any kind. In the photo, Salow is pointing to a translucent shield that conceals the robot’s primary sensor: the ibeo Lux. The team’s Volkswagen Passat carries three of these sensors, one on each side of the front of the vehicle and one in the rear. The following graphic from ibeo illustrates the configuration. The only other sensors on the robot are a GPS receiver and an Inertial Measurement Unit (IMU).

Salow stressed how deliberately the team had set out to make the vehicle consumer-friendly, and it showed. Apart from an emergency stop button on the console, nothing inside or outside the vehicle revealed the technology it carried. “We use it to do our shopping and drive around,” Salow said of the car. Computers were neatly stowed in hidden compartments, the wiring was concealed, and the ibeo sensors were all but invisible. More than anything, the robot seemed to showcase how the ibeo Lux could be physically integrated into a third-party product.

Salow then explained the workings of the technology itself. The ibeo Lux sensor has four lasers arranged vertically to cover a vertical “stripe” of 3.2° that is swept across a 100° horizontal field, detecting obstacles up to 200 meters away. With its 3.2° vertical coverage, the ibeo Lux effectively thickens the single planar scan of the flagship products made by its parent company, SICK.
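To put the 3.2° figure in perspective, here is a quick worked calculation of how tall the scanned band is at different ranges. It is a sketch that assumes the fan is symmetric about the sensor axis and ignores beam divergence and mounting pitch. The even split of the field into four equal layer bands is likewise an illustrative assumption, not a published ibeo specification.

```python
import math

def stripe_height(range_m: float, vertical_fov_deg: float = 3.2) -> float:
    """Height (m) of the vertical band covered at a given range.

    Assumes a fan symmetric about the sensor axis; ignores beam
    divergence and the sensor's mounting pitch.
    """
    half_angle = math.radians(vertical_fov_deg / 2.0)
    return 2.0 * range_m * math.tan(half_angle)

def layer_centres_deg(n_layers: int = 4, vertical_fov_deg: float = 3.2) -> list[float]:
    """Elevation of each layer's centre, assuming the layers split the
    vertical field into equal bands (an assumption, not an ibeo spec)."""
    band = vertical_fov_deg / n_layers
    return [-vertical_fov_deg / 2.0 + band * (i + 0.5) for i in range(n_layers)]

for r in (10.0, 50.0, 200.0):
    print(f"{r:5.0f} m -> band {stripe_height(r):5.2f} m tall")
print(layer_centres_deg())
```

At 10 m the band is roughly 0.56 m tall, at 50 m about 2.8 m, and at 200 m about 11.2 m, so even a few degrees of vertical coverage adds meaningful height at distance compared with a single scan plane.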

The primary differentiator between the ibeo Lux and other mobile laser scanning systems is the software system architecture that builds on the primitive data returned by the sensor. The sensor merely provides a list of points within the sensor’s angular field of view. ibeo has built on this information to provide higher levels of abstraction to application developers.

The first software layer is the raw data the sensor returns: a set of points, each giving the distance and angle from the sensor at which a laser pulse was reflected by an object. Darker objects return a weaker signal, while brighter or more reflective objects yield a stronger one. This principle is what allows the software to pick out painted stripes on the road. ibeo also has an algorithm that evaluates four measurements per sample in order to “see through” rain, fog, or dust.
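A minimal sketch of what this bottom layer might look like to a developer: polar returns converted to Cartesian coordinates, a multi-echo filter for rain or fog, and an intensity threshold for painted stripes. The data classes, the normalised 0 to 1 intensity scale, the thresholds, and the keep-the-farthest-strong-echo heuristic are all assumptions for illustration; this is not ibeo’s data format or its weather algorithm.

```python
import math
from dataclasses import dataclass

@dataclass
class Echo:
    range_m: float    # distance at which the pulse was reflected
    intensity: float  # return strength, assumed normalised to 0..1

@dataclass
class Pulse:
    angle_deg: float    # horizontal bearing within the 100-degree sweep
    layer: int          # which of the four vertical layers fired (0-3)
    echoes: list[Echo]  # one pulse can produce several echoes

def to_xy(range_m: float, angle_deg: float) -> tuple[float, float]:
    """Convert a polar return to sensor-frame x (forward) and y (left)."""
    a = math.radians(angle_deg)
    return range_m * math.cos(a), range_m * math.sin(a)

def select_echo(pulse: Pulse, min_intensity: float = 0.2):
    """Keep the farthest echo that clears an intensity floor.

    Rain, fog and dust tend to produce weak echoes in front of the real
    target, so this heuristic discards weak returns and trusts the last
    strong one. Returns None if every echo is too weak.
    """
    strong = [e for e in pulse.echoes if e.intensity >= min_intensity]
    return max(strong, key=lambda e: e.range_m) if strong else None

def is_lane_marking(echo: Echo, threshold: float = 0.7) -> bool:
    """Bright paint reflects more strongly than dark asphalt."""
    return echo.intensity >= threshold

# A pulse that first grazes a fog droplet, then hits a painted stripe.
pulse = Pulse(angle_deg=10.0, layer=0, echoes=[Echo(3.0, 0.05), Echo(42.0, 0.8)])
hit = select_echo(pulse)
print(hit.range_m, is_lane_marking(hit))  # 42.0 True
print(to_xy(hit.range_m, pulse.angle_deg))
```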

The second layer is an object recognition layer, which clusters the points returned by the sensor and identifies types of objects such as other vehicles or pedestrians. Above it sits an object tracking layer that follows up to 64 objects at once, reporting each one’s location, direction, and speed.
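The clustering and tracking layers can be sketched as follows. This assumes simple single-linkage clustering on 2-D points and greedy nearest-neighbour association, with velocity estimated from successive centroids. Production trackers typically use something more robust, such as a Kalman filter, and nothing here reflects ibeo’s actual implementation. The 64-track cap mirrors the limit quoted above; `gap`, `max_jump`, and the class and function names are hypothetical.

```python
import math
from dataclasses import dataclass
from itertools import count

MAX_TRACKS = 64  # tracking-layer capacity quoted in the article

Point = tuple[float, float]

def cluster(points: list[Point], gap: float = 0.5) -> list[list[Point]]:
    """Single-linkage clustering: a point joins a cluster if it lies within
    `gap` metres of any point already in it. O(n^2), fine for a sketch."""
    unvisited = list(points)
    clusters = []
    while unvisited:
        frontier = [unvisited.pop()]
        members = []
        while frontier:
            p = frontier.pop()
            members.append(p)
            near = [q for q in unvisited if math.dist(p, q) <= gap]
            for q in near:
                unvisited.remove(q)
            frontier.extend(near)
        clusters.append(members)
    return clusters

def centroid(members: list[Point]) -> Point:
    xs, ys = zip(*members)
    return sum(xs) / len(xs), sum(ys) / len(ys)

@dataclass
class Track:
    id: int
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0

    @property
    def speed(self) -> float:
        return math.hypot(self.vx, self.vy)

    @property
    def heading_deg(self) -> float:
        return math.degrees(math.atan2(self.vy, self.vx))

class Tracker:
    """Greedy nearest-neighbour tracker. Tracks with no nearby centroid
    are dropped; leftover centroids start new tracks up to MAX_TRACKS."""

    def __init__(self, max_jump: float = 2.0):
        self.max_jump = max_jump  # largest plausible move between scans (m)
        self.tracks: list[Track] = []
        self._ids = count()

    def update(self, centroids: list[Point], dt: float) -> list[Track]:
        unmatched = list(centroids)
        kept = []
        for t in self.tracks:
            if not unmatched:
                break
            c = min(unmatched, key=lambda q: math.dist((t.x, t.y), q))
            if math.dist((t.x, t.y), c) <= self.max_jump:
                unmatched.remove(c)
                t.vx, t.vy = (c[0] - t.x) / dt, (c[1] - t.y) / dt
                t.x, t.y = c
                kept.append(t)
        for c in unmatched:
            if len(kept) >= MAX_TRACKS:
                break
            kept.append(Track(next(self._ids), *c))
        self.tracks = kept
        return kept

tracker = Tracker()
for scan in ([(10.0, 0.0), (10.2, 0.1)], [(11.0, 0.0), (11.2, 0.1)]):
    tracks = tracker.update([centroid(c) for c in cluster(scan)], dt=0.1)
print(tracks[0].speed)  # about 10 m/s: the object moved 1 m in 0.1 s
```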

The highest layer is the application layer, which builds on the lower layers to implement high-level behaviors such as Adaptive Cruise Control (ACC), Pedestrian Avoidance, Stop and Go, and other driver-assistance applications.
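As an example of the kind of logic the application layer hosts, here is a textbook constant-time-gap Adaptive Cruise Control law that consumes a tracked lead vehicle’s gap and speed. The function name, gains, and limits are hypothetical and chosen for illustration; ibeo’s own ACC logic is not described in this article.

```python
from typing import Optional

def acc_command(ego_speed: float, set_speed: float,
                lead_gap: Optional[float] = None,
                lead_speed: Optional[float] = None,
                time_gap: float = 1.8,        # seconds of headway to keep
                standstill_gap: float = 4.0,  # metres to leave when stopped
                k_gap: float = 0.2, k_rel: float = 0.6, k_cruise: float = 0.4,
                a_min: float = -3.5, a_max: float = 2.0) -> float:
    """Longitudinal acceleration command (m/s^2) for a simple ACC.

    No lead vehicle: steer speed toward the driver's set speed.
    Lead vehicle: hold a gap of standstill_gap + time_gap * ego_speed,
    taking whichever command is more cautious. Output is clamped to
    comfort limits. All gains and limits are illustrative assumptions.
    """
    cruise = k_cruise * (set_speed - ego_speed)
    if lead_gap is None or lead_speed is None:
        command = cruise
    else:
        desired_gap = standstill_gap + time_gap * ego_speed
        follow = k_gap * (lead_gap - desired_gap) + k_rel * (lead_speed - ego_speed)
        command = min(cruise, follow)  # never exceed what cruising allows
    return max(a_min, min(a_max, command))

print(acc_command(25.0, 30.0))              # open road: accelerate
print(acc_command(25.0, 30.0, 30.0, 20.0))  # slower car close ahead: brake
print(acc_command(0.0, 30.0, 4.0, 0.0))     # queued at a stop: hold
```

With the ego car at rest and a stopped lead vehicle exactly at the standstill gap, the command is zero, which is the Stop and Go case in miniature.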

The ibeo Lux is currently in prototype form and is expected to enter production in October 2008. ibeo expects to produce approximately 3,000 units a year at a little over €9,000 (about US$13,132) each. Once the product reaches mass production, the unit cost is expected to drop to €180, according to Marketing Director Tanja Veeser.
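The pricing figures imply a few numbers worth making explicit. This quick calculation uses only the figures quoted above and treats “a little over €9,000” as exactly €9,000, so the results are approximate.

```python
PROTOTYPE_PRICE_EUR = 9_000    # "a little over" EUR 9,000, rounded down
PROTOTYPE_PRICE_USD = 13_132   # the article's USD conversion
MASS_PRODUCTION_EUR = 180      # expected unit cost at mass production
UNITS_PER_YEAR = 3_000         # initial production estimate

implied_rate = PROTOTYPE_PRICE_USD / PROTOTYPE_PRICE_EUR   # USD per EUR
reduction = 1 - MASS_PRODUCTION_EUR / PROTOTYPE_PRICE_EUR  # fraction saved
annual_value_eur = PROTOTYPE_PRICE_EUR * UNITS_PER_YEAR    # one year's output

print(f"implied rate: {implied_rate:.3f} USD/EUR")
print(f"price cut: {reduction:.0%} ({PROTOTYPE_PRICE_EUR // MASS_PRODUCTION_EUR}x cheaper)")
print(f"one year's output at prototype price: EUR {annual_value_eur:,}")
```

That works out to roughly 1.46 USD per EUR, a 98% (50-fold) price drop, and about €27 million worth of sensors a year at prototype pricing.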

The ibeo Lux’s predecessor, the Alasca, was widely used in the DARPA Urban Challenge. However, in a Robot Central poll of the top four teams (MIT would have placed fourth, according to DARPA Director Dr. Tony Tether), none used the higher-level functions ibeo made available, and only one ranked the sensor among its three most important sensors.

This is likely because implementing the higher-level software intelligence and data analysis was precisely the point of the Urban Challenge, and each team believed its own approach was best. Strip the software out of the ibeo equation and there is little more than a 3.2° vertical scan across a 100° sweep. ibeo’s biggest competitor, Velodyne, did not invest in a software stack and instead delivered a substantially more robust and complete view of the world around the robot.

The strongest proponent of the ibeo technology was third-place finisher Victor Tango of Virginia Tech. Team leader Dr. Charles Reinholtz explained that all of the robot’s systems were critical to its operation and that he was very satisfied with the ibeo’s performance. When pressed to rank his sensors, he placed the ibeo second, behind GPS.

As with any cutting-edge technology, the ibeo had its share of quirks. Dr. Reinholtz explained that the team programmed the robot to stop and reboot the system whenever it started acting up. One such event occurred during the final race, when the robot sat for 90 seconds while it rebooted its ibeo sensors.
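Victor Tango’s stop-and-reboot behavior is essentially a watchdog. Below is a hypothetical sketch of one: if scans stop arriving, it asks the vehicle to stop, power-cycles the sensor once the vehicle is stationary, and resumes only when fresh data flows again. The class, the state names, the callback, and the 0.5 s staleness timeout are all assumptions; the team’s actual trigger conditions were not described.

```python
import time
from enum import Enum, auto

class State(Enum):
    DRIVING = auto()
    STOPPING = auto()   # caller should command zero speed in this state
    REBOOTING = auto()  # caller should keep the vehicle stopped

class SensorWatchdog:
    """Hypothetical supervisor for one scanner. `clock` is injectable so
    the logic can be tested without real delays."""

    def __init__(self, stale_after_s: float = 0.5, clock=time.monotonic):
        self.stale_after_s = stale_after_s
        self.clock = clock
        self.last_scan = clock()
        self.state = State.DRIVING

    def on_scan(self) -> None:
        """Call whenever a fresh scan arrives from the sensor."""
        self.last_scan = self.clock()

    def step(self, vehicle_stopped: bool, reboot_sensor) -> State:
        stale = self.clock() - self.last_scan > self.stale_after_s
        if self.state is State.DRIVING and stale:
            self.state = State.STOPPING
        elif self.state is State.STOPPING and vehicle_stopped:
            reboot_sensor()                # power-cycle only once stationary
            self.state = State.REBOOTING
        elif self.state is State.REBOOTING and not stale:
            self.state = State.DRIVING     # data is flowing again
        return self.state
```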

The DARPA Urban Challenge, it seems, was not the best place to showcase the ibeo technology, because contestants’ desire to implement their own solutions to the same problems undercut the value of ibeo’s software. In the marketplace, however, ibeo has designed a formidable player in the Digital Horsepower economy. It is easy to envision this technology embedded in every vehicle that rolls off the assembly line. Features like Adaptive Cruise Control and brace-yourself Collision Avoidance systems will become cheap and commonly available.