ByteDance Seed has introduced GR-Dexter, a hardware-model-data framework for vision-language-action (VLA)-based generalist manipulation with a bimanual dexterous-hand robot.1 The system centers on the compact 21-DoF ByteDexter V2 hand, paired with intuitive teleoperation and a multi-source data recipe that delivers strong results on long-horizon tasks.1 Robot hands are the critical link to delivering value: they distinguish machines that merely navigate a space from those that can act meaningfully on the objects in it.

ByteDance Seed’s Robotics Focus

ByteDance Seed, the research arm behind GR-Dexter, drives advancements in robotics including dexterous manipulation and generalist VLA policies.4 This division also develops projects like GR-3 and GR-RL, underscoring a commitment to embodied AI.4 Their work emphasizes practical hardware alongside scalable training approaches.

The GR-Dexter framework integrates the bimanual setup of two ByteDexter V2 hands on dual Franka Research 3 arms, yielding a total of 56 DoF.1 Four RGB-D cameras provide coverage: one egocentric and three third-person views to track hand-object interactions effectively.1 This configuration supports detailed observation for VLA model training.
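The 56-DoF total follows from simple bookkeeping. The short sketch below tallies it, assuming the standard 7 joints per Franka Research 3 arm (a figure the text above does not state); the constant and function names are illustrative only.

```python
# Degrees of freedom for the bimanual GR-Dexter platform.
HAND_DOF = 21   # ByteDexter V2, as stated in the article
ARM_DOF = 7     # assumption: Franka Research 3 is a 7-joint arm
NUM_SIDES = 2   # left and right arm-hand pairs


def total_dof(hand_dof: int = HAND_DOF, arm_dof: int = ARM_DOF, sides: int = NUM_SIDES) -> int:
    """Return the combined joint count across both arm-hand pairs."""
    return sides * (hand_dof + arm_dof)


print(total_dof())  # 56
```

Each side contributes 21 + 7 = 28 joints, so the two sides together give the 56 DoF quoted above.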

ByteDexter V2: Hardware Innovations

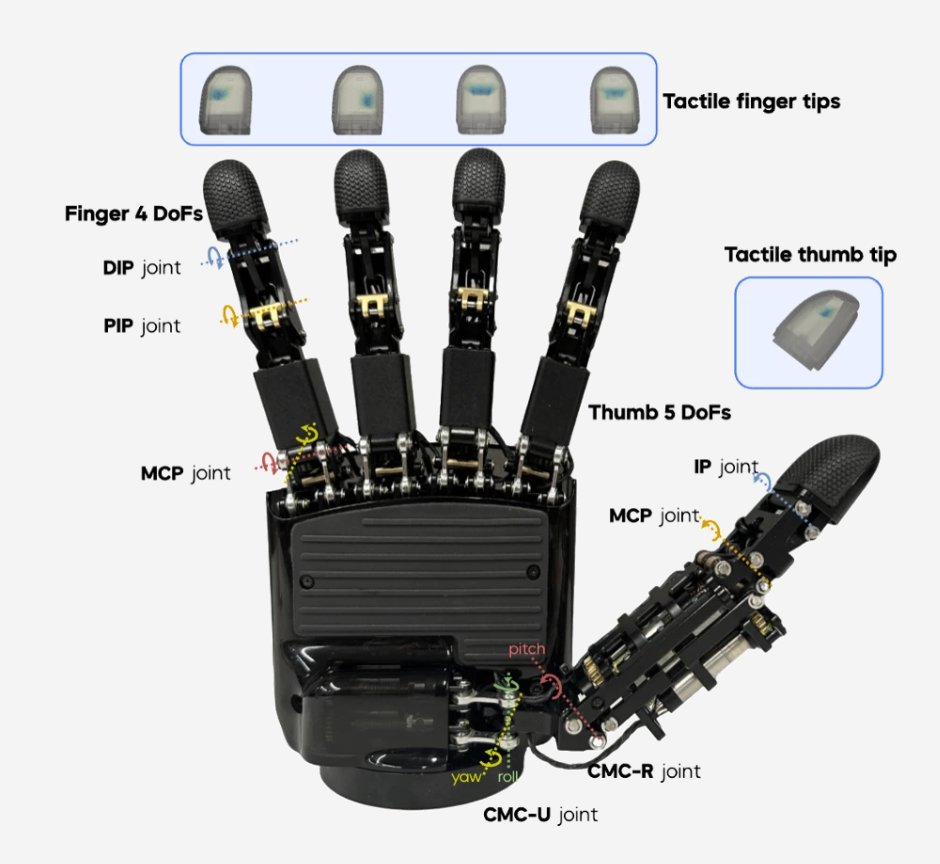

The ByteDexter V2 employs a linkage-driven design for its 21-DoF end-effector, mimicking human-like motion.2 A biomimetic underactuation mechanism couples distal interphalangeal joints to proximal ones through a four-bar linkage system.2 High-density piezoresistive tactile arrays in all five fingertips detect contact location and force.2
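To make "contact location and force" concrete, here is a minimal sketch of how one frame from a fingertip taxel grid could be reduced to a total force and a center of pressure. The grid size, the per-taxel calibration into newtons, and the noise threshold are all assumptions for illustration; the source does not describe the actual signal processing on ByteDexter V2.

```python
from typing import List, Optional, Tuple


def contact_estimate(
    frame: List[List[float]],
    noise_floor: float = 0.05,
) -> Tuple[float, Optional[Tuple[float, float]]]:
    """Reduce one tactile frame to (total_force, (row, col) center of pressure).

    frame: 2D grid of per-taxel forces in newtons (assumed already calibrated).
    noise_floor: readings at or below this value count as no contact (hypothetical).
    """
    total = 0.0
    row_moment = 0.0
    col_moment = 0.0
    for r, row in enumerate(frame):
        for c, force in enumerate(row):
            if force <= noise_floor:
                continue  # ignore taxels that are not in contact
            total += force
            row_moment += r * force
            col_moment += c * force
    if total == 0.0:
        return 0.0, None  # nothing is touching the fingertip
    # Force-weighted mean of taxel indices gives the contact location.
    return total, (row_moment / total, col_moment / total)


frame = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
force, location = contact_estimate(frame)
print(force, location)  # 4.0 (1.5, 1.5)
```

The location comes back in fractional taxel indices; converting it to millimeters would require the sensor's taxel pitch, which is not given here.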

Dexterous hands of this kind address core challenges in manipulation, a theme explored in earlier robotics coverage.

Teleoperation Pipeline

Bimanual teleoperation leverages MANUS gloves with real-time retargeting via constrained optimization using Sequential Quadratic Programming.3 This includes wrist-to-fingertip alignment and collision avoidance for precise control of the two arms and hands.3 The approach facilitates data collection for high-DoF tasks over extended durations.
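The retargeting step can be illustrated with a toy problem. The sketch below is not the GR-Dexter pipeline: it assumes a hypothetical planar two-link finger, made-up link lengths and joint limits, and a single "stay above the palm plane" inequality as a stand-in for collision avoidance. It uses SciPy's `minimize` with `method="SLSQP"`, which is an SQP solver, to match a glove-reported fingertip target under those constraints.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical planar finger: two links, lengths in centimeters.
L1, L2 = 5.0, 3.0
JOINT_BOUNDS = [(0.0, np.pi / 2), (0.0, np.pi / 2)]  # assumed joint limits (rad)


def fingertip(q: np.ndarray) -> np.ndarray:
    """Forward kinematics: joint angles -> fingertip (x, y) in the wrist frame."""
    x = L1 * np.cos(q[0]) + L2 * np.cos(q[0] + q[1])
    y = L1 * np.sin(q[0]) + L2 * np.sin(q[0] + q[1])
    return np.array([x, y])


def retarget(target: np.ndarray, q_prev: np.ndarray, smooth: float = 1e-3) -> np.ndarray:
    """Find joint angles whose fingertip best matches the glove target."""

    def cost(q: np.ndarray) -> float:
        track = np.sum((fingertip(q) - target) ** 2)  # wrist-to-fingertip alignment
        reg = smooth * np.sum((q - q_prev) ** 2)      # stay near the last solution
        return float(track + reg)

    # Stand-in for collision avoidance: keep the fingertip on or above y = 0.
    constraints = [{"type": "ineq", "fun": lambda q: fingertip(q)[1]}]

    result = minimize(
        cost,
        q_prev,                 # warm start from the previous frame
        method="SLSQP",
        bounds=JOINT_BOUNDS,
        constraints=constraints,
        options={"ftol": 1e-10, "maxiter": 200},
    )
    return result.x


q = retarget(target=np.array([5.0, 4.0]), q_prev=np.array([0.3, 0.3]))
print(q, fingertip(q))
```

Warm-starting each solve from the previous frame's solution is one common way to keep such a loop fast enough for real-time use; whether GR-Dexter does this is not stated in the source.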

This method tackles data scarcity in bimanual robotics through scalable collection and cross-embodiment learning.2 Operators can carry out long-horizon sequences using both hands at once.

Performance on Manipulation Tasks

GR-Dexter excels in long-horizon everyday manipulation and generalizable pick-and-place in real-world settings.1 It shows improved robustness to unseen objects and instructions over baselines.1 Pairing vision-language models with tactile sensing is what enables this combination of dexterity and intelligence.

Evaluations confirm better generalization to novel scenarios, a key requirement for practical deployment.1 The system’s design makes it a candidate for use in unstructured environments.

Real-World Applications

Targeted at human-centric spaces, GR-Dexter handles tasks demanding high dexterity like object manipulation.2 Everyday handling scenarios benefit from its long-horizon capabilities.1 This positions it for uses in homes or factories requiring fine motor skills.

Looking Ahead

GR-Dexter pairs practical dexterous hardware with scalable data strategies and cross-embodiment supervision, paving a path to generalist manipulation.1 Continued refinement in VLA policies will enhance adaptability to diverse instructions and objects. This framework serves as a foundational step, addressing gaps in high-DoF systems through efficient teleoperation and multi-view sensing.

Future iterations may integrate even denser sensing or faster retargeting to push boundaries further. As data scales, models could generalize across embodiments, reducing reliance on task-specific training. Ultimately, such systems bridge the divide between lab demos and reliable deployment in varied settings.
